Task Directed Image Understanding Challenge (TDIUC)

A more nuanced analysis of Visual Question Answering (VQA) algorithms

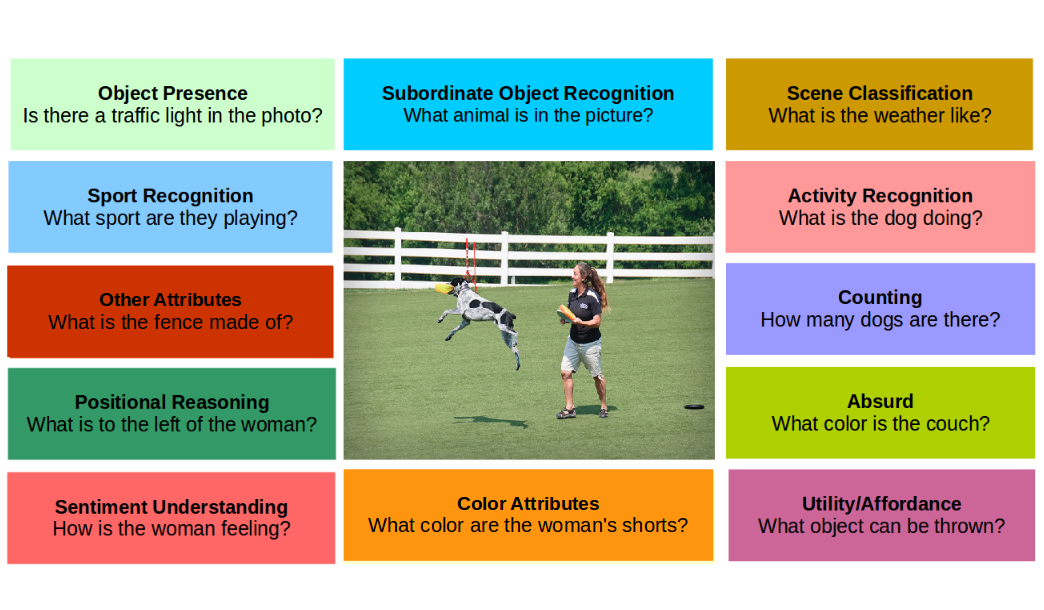

Visual Question Answering is a challenging vision and language problem that demands a wide range of language and image understanding abilities from an algorithm. However, studying the abilities of current VQA systems is very difficult due to critical problems with bias and a lack of well-annotated question-types. Task Directed Image Understanding Challenge (TDIUC) is a new dataset that divides VQA into 12 constituent tasks that makes it easier to measure and compare the performance of VQA algorithms.

TDIUC was created for multiple reasons. One of the major reasons why VQA is interesting is because it encompasses so many other computer vision problems, e.g., object detection, object classification, attribute classification, positional reasoning, counting, etc. Truly solving VQA requires an algorithm to be capable of solving all these problems. However, because prior datasets are heavily unbalanced toward certain kinds of questions, good performance on less frequent kinds of questions has negligible impact on overall performance. For example, in many datasets object presence questions are far more common than questions requiring positional reasoning, meaning that an algorithm that excels at positional reasoning is not able to showcase its abilities on these datasets. TDIUC's performance metrics compensate for this bias, so good performance on TDIUC requires good performance across kinds of questions. Another issue with other datasets is that many questions can be answered from just the question, so the algorithm ignores the image. TDIUC introduces absurd questions, that demand an algorithm look at the image to determine if the question is appropriate for the image.